AI and Ethics in Decentralized Data Use

Decentralised AI is reshaping how organisations handle data by keeping it local while enabling collaboration, but this shift introduces ethical concerns. Key challenges include bias, lack of transparency, and privacy risks. For example, federated learning can amplify biases when local data is inconsistent, and privacy isn’t always guaranteed due to risks like gradient inversion attacks. To address these, organisations must adopt frameworks that ensure accountability, transparency, and privacy while balancing localised data control with compliance. Practical solutions include dynamic consent models, bias audits, and privacy-preserving technologies like differential privacy. The right governance approach - centralised, decentralised, or hybrid - depends on balancing flexibility, security, and accountability.

Key Points:

- Decentralised AI systems improve data privacy but risk amplifying bias and reducing transparency.

- Federated learning shares model updates, not raw data, but privacy risks like data reconstruction remain.

- Ethical AI frameworks, such as Wick’s Four Pillar Framework, help integrate accountability and responsible practices.

- Tools like SHAP, LIME, and blockchain-based smart contracts enhance transparency and trust.

- In the UAE, compliance with strict local data laws is critical for organisations adopting AI.

Quick Takeaway: Balancing innovation with ethical responsibility requires clear frameworks, regular audits, and privacy-focused technologies to build trust and ensure compliance.

Ethical Problems in AI-Powered Decentralized Data Systems

When AI operates within decentralised networks, it offers privacy benefits but also introduces ethical challenges related to bias, transparency, and accountability. Here's a closer look at these issues.

How Distributed AI Systems Amplify Bias

Decentralised AI systems face a tricky problem: data across nodes is often not independent and identically distributed (non-IID), which can lead to client drift. Local models trained on different distributions diverge from one another, and the aggregated global model can end up biased.

For example, high-resource institutions - like well-funded hospitals in Dubai or Abu Dhabi - often dominate such networks. Their data shapes the models to fit their specific demographics, leaving underrepresented groups on the sidelines. This local scenario reflects a global issue in decentralised networks. As Shahnila Rahim, Assistant Professor at Noroff University College, points out:

"Federated learning does not eliminate bias; it can, in fact, amplify it".

Bhavya Jain, a cybersecurity expert, further warns:

"A model trained on biased or incomplete data is not just a fairness issue. It can create discriminatory outcomes that breach legal and ethical boundaries".

The risks are especially serious in sectors like healthcare, hiring, and finance. These models may perform well for dominant groups but fail to serve marginalised populations, leading to real-world consequences.
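To make the dominance effect concrete, here is a minimal sketch of sample-size-weighted averaging, the weighting commonly used in federated averaging. The node names, sizes, and values are hypothetical, and the "model" is reduced to a single number (the local mean) purely for illustration.

```python
# Toy federated averaging: each node fits a one-parameter "model" (the mean
# of its local data) and the server averages the models weighted by sample count.

def local_update(samples):
    """Return (local model, sample count). The 'model' is just the local mean."""
    return sum(samples) / len(samples), len(samples)

def federated_average(updates):
    """Sample-size-weighted average of the local models."""
    total = sum(n for _, n in updates)
    return sum(model * n for model, n in updates) / total

# Hypothetical non-IID data: one large node and two small ones whose
# populations look quite different from each other.
large_hospital = [10.0] * 900
small_clinic_a = [20.0] * 50
small_clinic_b = [30.0] * 50

updates = [local_update(d) for d in (large_hospital, small_clinic_a, small_clinic_b)]
global_model = federated_average(updates)

for name, (model, n) in zip(("large_hospital", "small_clinic_a", "small_clinic_b"), updates):
    gap = abs(model - global_model)
    print(f"{name}: local={model:.1f}, n={n}, gap to global={gap:.1f}")
print(f"global model: {global_model:.1f}")
```

In this toy setup the global model sits close to the large node's data and far from the small clinics', which is the pattern the paragraph above describes. Real systems would compare per-node or per-group error rather than a single mean.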

Lack of Transparency in AI Decision-Making

Another ethical challenge is the lack of transparency in AI decisions. Many deep learning models act like "black boxes", making it hard to understand their decision-making processes. This lack of clarity also makes it difficult to assign accountability when things go wrong, especially in multi-institutional networks. As Rahim asks:

"In a multi-institutional federated consortium, responsibility for errors is diffuse. If a patient is harmed by a flawed model, is accountability borne by the local institution that trained part of the model, the coordinating server, or the consortium as a whole?".

This issue is further complicated by governance silos. Privacy, security, and legal teams often work in isolation, creating blind spots throughout the AI development lifecycle.

Privacy vs. Utility in Decentralised Data Sharing

Decentralised systems also face a tough balancing act between privacy and AI utility. Federated learning keeps raw data local, sending only model updates to a central server. However, this does not guarantee absolute privacy. Techniques like gradient inversion attacks can reverse-engineer these updates, exposing sensitive information.
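The simplest case of this leakage can be shown in a few lines. The sketch below assumes a linear model with a bias term, squared-error loss, and an update computed from a single record; under those assumptions the input can be read straight off the shared gradients. Attacks on deep networks are optimisation-based and far more involved, so treat this as an illustration of the principle only. All values are hypothetical.

```python
# For loss = 0.5 * (w.x + b - y)^2 on ONE example:
#   grad_w = error * x   and   grad_b = error
# so anyone who sees both gradients can compute x = grad_w / grad_b.

def gradients(w, b, x, y):
    """Gradients of the squared-error loss for a single training example."""
    error = sum(wi * xi for wi, xi in zip(w, x)) + b - y
    grad_w = [error * xi for xi in x]
    grad_b = error
    return grad_w, grad_b

def reconstruct_input(grad_w, grad_b):
    """Recover the private input from the shared gradients (needs grad_b != 0)."""
    return [g / grad_b for g in grad_w]

w, b = [0.5, -0.25, 1.0], 0.1
private_x, private_y = [42.0, 1.0, 7.5], 3.0  # hypothetical sensitive record

grad_w, grad_b = gradients(w, b, private_x, private_y)
recovered = reconstruct_input(grad_w, grad_b)

print("private input:  ", private_x)
print("recovered input:", [round(v, 6) for v in recovered])
```

Averaging gradients over larger batches makes this exact recovery harder, but it does not by itself amount to a privacy guarantee.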

This challenge is particularly pressing in industries like marketing, where organisations need detailed customer insights while complying with data protection laws such as the UAE's Personal Data Protection Law. Technologies like differential privacy or homomorphic encryption can reduce these risks, but they often come at the cost of accuracy.
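The accuracy cost can be seen in a small sketch of the clip-and-add-noise step often used when applying differential privacy to model updates. The clipping bound and noise levels below are arbitrary illustrative values; translating a noise level into a formal privacy guarantee requires a privacy accounting step that is omitted here.

```python
import math
import random

def l2_norm(vec):
    return math.sqrt(sum(v * v for v in vec))

def clip_update(update, max_norm):
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = l2_norm(update)
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]

def privatise(update, max_norm, noise_std, rng):
    """Clip the update, then add Gaussian noise to each coordinate."""
    clipped = clip_update(update, max_norm)
    return [u + rng.gauss(0.0, noise_std) for u in clipped]

def mean_distortion(update, max_norm, noise_std, rng, trials=2000):
    """Average distance between the noisy update and the clipped one."""
    clipped = clip_update(update, max_norm)
    total = 0.0
    for _ in range(trials):
        noisy = privatise(update, max_norm, noise_std, rng)
        total += l2_norm([n - c for n, c in zip(noisy, clipped)])
    return total / trials

rng = random.Random(0)      # fixed seed so the run is repeatable
raw_update = [3.0, 4.0]     # hypothetical model update from one node
clipped = clip_update(raw_update, max_norm=1.0)

distortion = {std: mean_distortion(raw_update, 1.0, std, rng) for std in (0.0, 0.1, 1.0)}
for std, err in distortion.items():
    print(f"noise_std={std}: mean distortion={err:.3f}")
```

More noise means a stronger barrier against reconstruction, but also an update that is further from what the node actually learned. That is the privacy-utility trade-off in miniature.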

Rahim sums up the dilemma:

"The central challenge is clear: how can we harness the collective power of biomedical data without compromising privacy, equity, or trust?".

This question is just as relevant to marketing data, where businesses must find a balance between personalisation and protecting user privacy. Static consent forms are increasingly being replaced by dynamic consent models, which keep participants informed about changing risks.

Solutions Through Coordinated Data Sharing and Decentralised AI

Coordinated data sharing offers a direct way to tackle challenges like bias, lack of transparency, and privacy risks. By combining careful data-sharing strategies with robust privacy measures, organisations can maintain the balance between safeguarding privacy and ensuring the quality of data needed for their operations.

The 'As Little as Possible, As Much as Necessary' Principle

This principle aims to address a critical question: how can privacy be balanced with delivering high-quality services? Currently, an overwhelming 90% of people sacrifice their privacy without receiving enough value in return. The problem isn’t just about individual decisions - it’s also about the absence of a coordinated approach.

Evangelos Pournaras, Associate Professor at the University of Leeds, highlights this challenge:

"To achieve a minimum quality of service for a population of individuals whilst maximising their privacy, a collective arrangement (i.e. coordination) of their data-sharing decisions is required to minimise both excessive and insufficient levels of data sharing".

One solution is deploying decentralised AI assistants on user devices. These tools automatically adjust data-sharing settings, ensuring that only the necessary information is shared. The impact of this approach is impressive: coordinated sharing restores an average of 77% of privacy compared to traditional data-sharing models. At the same time, it reduces data-collection costs for service providers by 10.7% to 32.9%.

This also addresses the "mismatch" issue - the gap between the data shared and the data actually needed. By treating personal data as a limited resource and coordinating its use across a "data collective", organisations can achieve accurate outcomes with far less data. For example, smart city traffic systems can produce precise density estimates with 50% less data than what is currently required through default GPS sharing.
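As a rough illustration of the mismatch idea, the sketch below assumes a service that needs only a fixed number of location reports per zone and a coordinator that asks just enough users to share. This is a toy model, not the method used in the research quoted above; the zone names, user IDs, and quota are hypothetical.

```python
from collections import defaultdict

def coordinate_sharing(user_zones, required_per_zone):
    """Pick just enough users per zone to share their location.

    user_zones maps user_id -> zone. Returns the set of users asked to share.
    """
    by_zone = defaultdict(list)
    for user, zone in sorted(user_zones.items()):
        by_zone[zone].append(user)
    sharers = set()
    for users in by_zone.values():
        # Zones with fewer users than the quota simply contribute everyone.
        sharers.update(users[:required_per_zone])
    return sharers

# Hypothetical population: a crowded centre and two quieter zones.
user_zones = {f"user_{i:02d}": "centre" for i in range(8)}
user_zones.update({f"user_{i:02d}": "north" for i in range(8, 11)})
user_zones["user_11"] = "south"

sharers = coordinate_sharing(user_zones, required_per_zone=2)
avoided = 1 - len(sharers) / len(user_zones)

print(f"default sharing: {len(user_zones)} users")
print(f"coordinated sharing: {len(sharers)} users")
print(f"sharing avoided: {avoided:.0%}")
```

This version always selects the same users in each zone. A fairer scheme would rotate who is asked to share so that the privacy cost is spread across the collective.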

By making data-sharing decisions more precise, organisations can focus on strict privacy measures and clear accountability.

Privacy and Accountability in Decentralised AI

Coordinated data sharing must go hand in hand with robust privacy and accountability frameworks. Protecting sensitive data while ensuring responsibility requires a dual focus. Keeping data on local devices limits exposure of raw records, while techniques like differential privacy help safeguard the shared model updates against threats such as gradient inversion attacks.

The concept of "explicability" plays a key role in bridging understanding and accountability. As Professor Luciano Floridi and researcher Josh Cowls explain:

"Explicability, understood as incorporating both the epistemological sense of intelligibility (as an answer to the question 'how does it work?') and in the ethical sense of accountability (as an answer to the question: 'who is responsible for the way it works?')".

To enforce accountability, organisations can establish clear governance roles and use blockchain-based smart contracts. These contracts can automate the tracking of contributions and ensure transparency. Additionally, dynamic consent models actively inform participants of any changes in data risks, keeping them updated and engaged.
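The contribution-tracking idea can be sketched without a blockchain at all. The example below is a hash-chained, tamper-evident log in plain Python; it stands in for the record-keeping a smart contract might provide and is not an actual smart contract or distributed ledger. The node names and actions are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first record

def entry_hash(entry, prev_hash):
    """Hash the entry together with the previous record's hash."""
    payload = json.dumps(entry, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_entry(log, entry):
    """Append a record that is linked to the one before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"entry": entry, "prev_hash": prev_hash,
                "hash": entry_hash(entry, prev_hash)})

def verify(log):
    """Return True only if every record still matches its stored hash chain."""
    prev_hash = GENESIS
    for record in log:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != entry_hash(record["entry"], prev_hash):
            return False
        prev_hash = record["hash"]
    return True

log = []
append_entry(log, {"node": "hospital_a", "round": 1, "action": "submitted_update"})
append_entry(log, {"node": "clinic_b", "round": 1, "action": "submitted_update"})
append_entry(log, {"node": "coordinator", "round": 1, "action": "aggregated"})

ok_before = verify(log)
log[1]["entry"]["node"] = "someone_else"  # simulate after-the-fact tampering
ok_after = verify(log)

print("valid before tampering:", ok_before)
print("valid after tampering: ", ok_after)
```

A log like this helps answer "who contributed what, and when" after the fact. It does not decide who is responsible; that still depends on the governance roles the organisation defines.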

Applying Ethical AI Frameworks in Marketing Technology

Using Decentralised AI for Data Collectives

A striking 42% of organisations report a lack of sufficient proprietary data for AI customisation. Decentralised AI offers a solution, enabling collaboration across data sources without exposing raw customer data. In marketing, techniques like federated learning allow organisations to work together while safeguarding sensitive information.

In October 2025, the UAE Ministry of Human Resources and Emiratisation introduced "Eye", an AI-powered system designed to streamline work permit processing. This system uses intelligent document verification for passports and academic credentials, all while maintaining centralised oversight to ensure compliance.

However, privacy concerns remain a significant roadblock. 40% of enterprises cite privacy and confidentiality as major obstacles to adopting generative AI. For marketing teams in the UAE, this challenge is compounded by data sovereignty laws that require sensitive data to be processed and stored within the country. Addressing these issues demands a focus on transparency and minimising bias.

Building Transparency and Reducing Bias

While 79% of executives highlight the importance of AI ethics, fewer than 25% actually put governance principles into practice. This disconnect between intention and action creates risks for marketing systems relying on AI technology.

To build transparency, organisations can adopt targeted explanations tailored to their audiences. For instance, technical teams might receive detailed insights into model logic, while end-users are presented with easy-to-understand summaries of decision-making processes. Regular bias audits, utilising tools like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-Agnostic Explanations), are essential. Documenting processes through artefacts such as "Model Cards" and "Data Sheets" further ensures clarity by recording data sources, training objectives, and system limitations.
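A documentation artefact of this kind can be as simple as a structured record that travels with the model. The sketch below shows one possible shape for a model card; the field names and example values are assumptions for illustration, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class ModelCard:
    """Minimal record of what a model is for and where its limits are."""
    name: str
    training_objective: str
    data_sources: list
    limitations: list
    bias_audit_notes: list = field(default_factory=list)

    def missing_sections(self):
        """Names of required sections that were left empty."""
        required = ("training_objective", "data_sources", "limitations")
        return [section for section in required if not getattr(self, section)]

# Hypothetical marketing model, for illustration only.
card = ModelCard(
    name="churn-propensity-v1",
    training_objective="Rank existing customers by likelihood of churn",
    data_sources=["CRM activity logs (UAE region)", "support ticket metadata"],
    limitations=["Not validated for customers with under 3 months of history"],
    bias_audit_notes=["Per-segment error rates reviewed quarterly"],
)

print(json.dumps(asdict(card), indent=2))
print("missing sections:", card.missing_sections())
```

A check such as `missing_sections()` can be run before release so that a model without documented data sources or limitations is flagged rather than shipped.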

In April 2025, the Dubai Centre for Artificial Intelligence launched the "Dubai AI Seal", a certification system that ranks companies across six tiers (S through E) based on their economic contributions and adherence to ethical AI standards.

Jinane Mounsef, Chair of Electrical Engineering and Computing Sciences at Rochester Institute of Technology in Dubai, underscores the importance of accountability:

"Responsible AI should be explainable, fair and privacy-conscious. The greatest risk in AI is assuming someone else is thinking about the ethics".

By establishing strong ethical frameworks, organisations can embed fairness and transparency into their AI-driven marketing technologies.

Wick's Four Pillar Framework for Ethical Marketing Technology

For businesses in the UAE, aligning with local privacy laws and data sovereignty requirements is critical. Wick's Four Pillar Framework provides a structured approach to embedding ethical AI into marketing technology systems. Here's how it works:

- The Core Foundations pillar sets the groundwork with human-centred design, ensuring AI complements human judgement. This includes "Privacy by Design" protocols and fairness measures to ensure equitable outcomes across diverse audiences.

- The Data Management pillar oversees the entire data lifecycle, ensuring traceability and conducting rigorous checks to prevent biases from creeping into AI systems.

- The AI Risk Management pillar proactively categorises AI systems by risk levels and enforces conformity assessments for high-risk applications.

- The AI Literacy pillar focuses on equipping marketing teams with the skills to critically evaluate AI outputs and integrate ethical practices into their daily operations.
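The risk-categorisation step in the AI Risk Management pillar could be expressed as a small rule set. The attributes, tiers, and rules below are hypothetical choices made for this sketch; they are not taken from the framework itself or from any regulation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AISystem:
    name: str
    uses_personal_data: bool
    affects_individual_outcomes: bool  # e.g. pricing, eligibility, offers
    human_in_the_loop: bool

def risk_tier(system):
    """Assign an illustrative risk tier from three yes/no attributes."""
    if system.affects_individual_outcomes and not system.human_in_the_loop:
        return "high"
    if system.affects_individual_outcomes or system.uses_personal_data:
        return "medium"
    return "low"

def needs_conformity_assessment(system):
    """In this sketch, only high-risk systems require a formal assessment."""
    return risk_tier(system) == "high"

# Hypothetical marketing systems.
systems = [
    AISystem("faq_chatbot", False, False, False),
    AISystem("audience_segmentation", True, False, True),
    AISystem("automated_credit_offer", True, True, False),
]

for s in systems:
    print(f"{s.name}: tier={risk_tier(s)}, assessment={needs_conformity_assessment(s)}")
```

Keeping the rules in code makes the categorisation repeatable and reviewable, although the rules themselves still need to be set by people who understand the legal and business context.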

For UAE enterprises, this framework supports privacy-preserving techniques like federated learning, secure multiparty computation, and differential privacy. These methods minimise data exposure during collaborative processing. With projections showing that AI could contribute nearly 14% to the UAE's GDP by 2030 - equivalent to approximately AED 356 billion - embracing ethical AI frameworks is not just a responsible choice but a strategic necessity for sustainable growth.

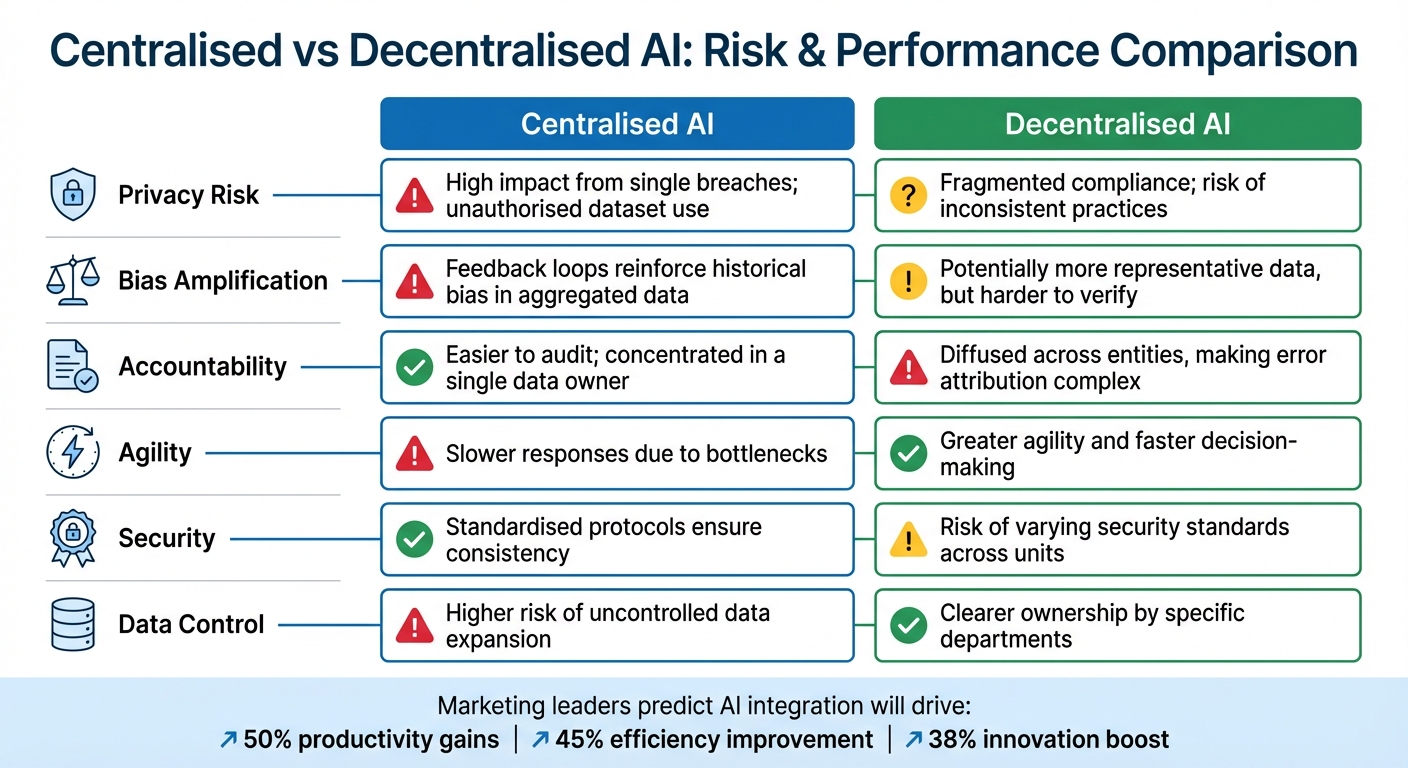

Risk Comparison: Centralised vs. Decentralised AI in Marketing Technology

Centralised vs Decentralised AI Systems: Privacy, Bias, and Accountability Comparison

When deciding between centralised and decentralised AI systems, businesses must weigh the trade-offs in privacy, bias, and accountability. Centralised AI consolidates data into a single repository, which can act as a "single point of failure." If breached, the consequences can be severe. For example, the French Competition Authority fined Google €250 million for using publisher content to train its Bard model without proper notification, highlighting how centralised systems can misuse large datasets without consent.

On the other hand, decentralised AI spreads data across multiple nodes, lowering the risk of large-scale breaches. However, this setup comes with its own set of challenges. Individual departments may lack the specialised expertise of a centralised IT team, potentially leading to inconsistent security standards. Moreover, accountability becomes more complex. If a model produces biased results, it can be difficult to determine whether the issue stems from a local node, the coordinating server, or the broader consortium, as previously discussed.

Centralised systems provide uniform compliance protocols, while decentralised models are more agile and can adapt quickly to market changes. Striking the right balance between governance and flexibility is key. A hybrid approach - combining centralised oversight for compliance with decentralised execution for localised data ownership - can offer a practical solution.

Comparison Table: Centralised AI vs. Decentralised AI

| Feature | Centralised AI | Decentralised AI |
|---|---|---|
| Privacy Risk | High impact from single breaches; unauthorised dataset use | Fragmented compliance; risk of inconsistent practices |
| Bias Amplification | Feedback loops reinforce historical bias in aggregated data | Potentially more representative data, but harder to verify |
| Accountability | Easier to audit; concentrated in a single data owner | Diffused across entities, making error attribution complex |
| Agility | Slower responses due to bottlenecks | Greater agility and faster decision-making |
| Security | Standardised protocols ensure consistency | Risk of varying security standards across units |
| Data Control | Higher risk of uncontrolled data expansion | Clearer ownership by specific departments |

Marketing leaders predict that AI integration will drive productivity gains by 50%, improve efficiency by 45%, and boost innovation by 38%. For organisations leaning toward decentralised models, continuous auditing tools are essential to detect bias without exposing raw local data. Meanwhile, those opting for centralised systems should focus on data minimisation - collecting only the information necessary for specific campaigns. Finding this balance between governance and flexibility is crucial for building ethical, accountable AI systems in marketing technology.
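One way to audit for bias without moving raw records is to have each node report only aggregate counts per group, which a coordinator then merges. The sketch below computes a simple selection-rate gap across groups under that assumption. The node data and group labels are hypothetical, and the metric is one of several that a real audit might use.

```python
def local_counts(records):
    """Summarise (group, predicted_positive) rows as {group: (positives, total)}.

    Only these aggregates leave the node, never the individual rows.
    """
    counts = {}
    for group, positive in records:
        pos, total = counts.get(group, (0, 0))
        counts[group] = (pos + int(positive), total + 1)
    return counts

def merge_counts(all_counts):
    """Combine the per-node aggregates at the coordinator."""
    merged = {}
    for counts in all_counts:
        for group, (pos, total) in counts.items():
            mpos, mtotal = merged.get(group, (0, 0))
            merged[group] = (mpos + pos, mtotal + total)
    return merged

def selection_rates(merged):
    return {group: pos / total for group, (pos, total) in merged.items()}

def parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())

# Hypothetical predictions held at two nodes.
node_a = [("x", True)] * 8 + [("x", False)] * 2 + [("y", True)] * 2 + [("y", False)] * 3
node_b = [("x", True)] * 4 + [("x", False)] * 6 + [("y", True)] * 1 + [("y", False)] * 4

merged = merge_counts([local_counts(node_a), local_counts(node_b)])
rates = selection_rates(merged)
gap = parity_gap(rates)

print("merged counts:", merged)
print("selection rates:", rates)
print(f"parity gap: {gap:.2f}")
```

Aggregate counts for very small groups can still reveal information about individuals, so a production audit would typically add minimum group sizes or noise before sharing them.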

Conclusion

Balancing accountability with progress is at the core of ethical AI in decentralised systems. While 79% of executives acknowledge the importance of AI ethics, fewer than 25% have enforceable governance in place. This stark contrast between intent and action stresses the need for frameworks that translate ideals into real-world practices. As highlighted earlier, addressing this gap requires moving beyond corporate rhetoric to implement structured, actionable strategies.

Decentralised AI offers benefits like enhanced privacy and potentially more representative data, but it also introduces complexities in verifying fairness and ensuring accountability. To tackle these challenges, organisations can adopt a risk-based governance model. This involves categorising AI systems by their functionality and potential impact, appointing ethics focal points within each unit, and conducting regular privacy impact assessments. Tools like differential privacy and encryption can further strengthen these efforts. Such measures align ethical considerations with practical solutions, paving the way for responsible and sustainable AI development.

"The question is no longer whether AI should be regulated, but how to strike the right balance between innovation and control." – Eliza Lozan, Partner, Deloitte Middle East

In marketing technology, targeted frameworks provide clear steps to integrate ethical principles. Wick's Four Pillar Framework - comprising Build & Fill, Plan & Promote, Capture & Store, and Tailor & Automate - ensures transparent data practices, accountable decision-making, and fair personalisation. This directly addresses pressing issues: 45% of business leaders cite data accuracy or bias as barriers to AI adoption, while 40% highlight privacy concerns.

The shift from voluntary guidelines to enforceable regulations, such as the EU AI Act and the UAE Charter for AI, signals a new phase where ethical AI is no longer optional. To navigate this evolving landscape, organisations must establish AI Ethics Boards, conduct regular bias audits, and maintain comprehensive documentation. These steps are essential for building trust and ensuring compliance in an increasingly regulated environment.

FAQs

What are the privacy and bias implications of decentralised AI?

Decentralised AI brings a major advantage when it comes to data privacy. By enabling organisations to share information securely without needing to centralise sensitive data, it significantly lowers the chances of large-scale data breaches. It also aligns with data sovereignty requirements, making it easier for organisations to comply with local regulations. That said, ensuring consistent privacy standards across a variety of systems can be a tough nut to crack.

On the topic of bias, decentralised AI has the potential to minimise it by pulling insights from a wide range of data sources and distributing decision-making across multiple systems. However, uneven data quality or varying governance practices in different regions can unintentionally worsen bias. To mitigate these risks, strong oversight and well-defined ethical guidelines are absolutely necessary.

What ethical concerns arise in federated learning systems?

Federated learning brings with it a set of ethical challenges that demand thoughtful attention. One major concern is data privacy - even though data stays local, sensitive information might still be exposed unintentionally during collaborative processes. Another pressing issue is algorithmic bias. When AI models are trained on data that isn’t balanced or representative, the results can be skewed, leading to unfair or even discriminatory outcomes.

The decentralised nature of federated learning also raises questions about accountability. Who takes responsibility when errors or biases occur? This lack of clarity can complicate efforts to ensure ethical practices. On top of that, ensuring fair participation among all contributors and navigating regulatory compliance across various jurisdictions adds another layer of complexity.

To tackle these issues, organisations must prioritise strong governance frameworks, maintain transparency in decision-making, and conduct regular audits. These steps are essential for promoting responsible and ethical AI practices.

What steps can organisations take to ensure AI systems are transparent and accountable?

To maintain transparency and accountability in AI systems, organisations can implement strong governance frameworks that emphasise ethical principles like fairness, openness, and human oversight. Conducting regular audits and closely monitoring the performance of AI systems can help uncover and correct biases or errors in decision-making.

Encouraging a culture of openness is equally important. Clearly explaining how AI systems operate and make decisions can go a long way in building trust with stakeholders. Involving diverse teams in the design and evaluation processes of AI solutions is another key step. This approach not only reduces bias but also promotes inclusivity in the outcomes.